Monte Carlo simulations are a series of experiments that help us understand the probability of different outcomes when random variables are involved. It’s a technique you can use to understand the impact of risk and uncertainty in prediction and forecasting models.

Although Monte Carlo Methods can be studied and applied in many ways, we’re going to focus on:

- What Monte Carlo Simulation/Method actually is, along with some examples and a few experiments.

- Simulating the game of Roulette with methods of Monte Carlo and inferential statistics.

- Understanding areas where it finds its application other than gambling.

Why should you learn about Monte Carlo Simulation?

Monte Carlo simulations rely on a technique called Monte Carlo sampling, which means randomly sampling from a probability distribution. We’re going to see a simulation based on this technique later in the article.
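As a minimal sketch of what randomly sampling a probability distribution can look like, the snippet below draws values from a standard normal distribution and uses them to estimate the probability that a value exceeds a threshold. The function name `estimate_tail_probability`, the choice of distribution, and the threshold are illustrative assumptions, not something taken from the article.

```python
import random


def estimate_tail_probability(threshold, num_samples, seed=0):
    """Estimate P(X > threshold) for X ~ Normal(0, 1) by random sampling."""
    rng = random.Random(seed)  # local generator, leaves the global seed alone
    hits = 0
    for _ in range(num_samples):
        # draw one sample and count it if it lands above the threshold
        if rng.gauss(0, 1) > threshold:
            hits += 1
    return hits / num_samples


if __name__ == '__main__':
    # hypothetical usage: the exact answer is roughly 0.159
    print(estimate_tail_probability(1.0, 100_000))
```

The estimate is just the fraction of samples that satisfy the condition, which is the same "count and divide" idea used in the roulette experiments later on.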

Monte Carlo simulations have a vast array of potential applications in fields like business and finance. Telecoms use them to assess network performance in different scenarios, helping them to optimize the network. Analysts use them to assess the risk that an entity will default, and to analyze derivatives such as options. Insurers and oil well drillers also use them. Monte Carlo simulations have countless applications outside of business and finance, such as in meteorology, astronomy, and particle physics. [2]

In machine learning, Monte Carlo methods provide the basis for resampling techniques like the bootstrap method for estimating a quantity, such as the accuracy of a model on a limited dataset.

The bootstrap method is a resampling technique used to estimate statistics on a population by sampling a dataset with replacement.
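To make the idea of sampling with replacement concrete, here is a small sketch of a bootstrap estimate of a mean and its spread. The helper name `bootstrap_means` and the scores in the usage example are made up for illustration; treat this as one possible way to do it rather than a reference implementation.

```python
import random
import statistics


def bootstrap_means(data, num_resamples, seed=0):
    """Return the means of num_resamples bootstrap resamples of data."""
    rng = random.Random(seed)
    means = []
    for _ in range(num_resamples):
        # sample with replacement, same size as the original dataset
        resample = rng.choices(data, k=len(data))
        means.append(statistics.mean(resample))
    return means


if __name__ == '__main__':
    # made-up accuracy scores, purely for illustration
    scores = [0.81, 0.78, 0.85, 0.80, 0.79, 0.83, 0.77, 0.82]
    means = bootstrap_means(scores, 1000)
    print(f'bootstrap mean      = {statistics.mean(means):.3f}')
    print(f'bootstrap std error = {statistics.stdev(means):.3f}')
```

The spread of the resampled means gives a rough idea of how much the estimate would change if the dataset had been slightly different.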

Some history of Monte Carlo Simulation

Stanislaw Ulam, a Polish-American mathematician, is credited as the first to study Monte Carlo simulation. While playing solitaire, he wondered what the probability of winning the game was. He spent a lot of time trying to work out the odds, but failed. This is understandable, since solitaire has an enormous number of possible combinations, far too many to calculate by hand.

To tackle this, he thought of going the experimental route: counting the number of hands won and dividing it by the number of hands played. But he had already played a lot of hands without a win, so it would take him years to play enough hands to get a good estimate. So instead of playing all the hands himself, he thought of simulating the game on a computer.

Back then, access to computers wasn’t easy, as there weren’t many machines around. So, influential as he was, he contacted John von Neumann, a prominent mathematician of the time, and using the ENIAC machine they were able to run the simulation of the solitaire game. And thus, Monte Carlo Simulation was born!

What is Monte Carlo Simulation?

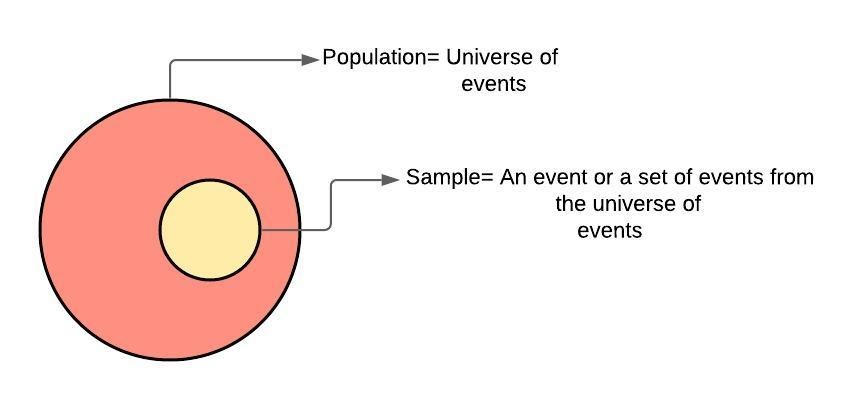

Monte Carlo Simulation is a method of estimating the value of an unknown quantity using the principles of inferential statistics. Inferential statistics means applying statistical methods to a random sample that tends to exhibit the same properties as the population from which it is drawn.

In the case of solitaire, the population is the universe of all games of solitaire that could possibly be played, and the sample is the games we actually played (more than one). Inferential statistics tells us that we can make inferences about the population based on the statistics we compute on the sample.

Note: This only holds true when the sample is drawn randomly.

A major part of inferential statistics is decision-making. But to make a decision you must know which outcomes might occur, and how much they vary.

Let’s take an example to understand this better. Say you’re given a coin and you have to estimate the fraction of heads you would get if you flipped it a certain number of times. How confident would you be in saying that all flips will come up heads? Or that heads will come up on the (t+1)th flip? Let’s weigh some possibilities to make it interesting:

- You flip a coin 2 times and both come up heads. Would you take this into account when predicting the outcome of the 3rd toss?

- What if there are 100 trials and all come up heads? Would you feel more comfortable predicting that the 101st flip will be heads?

- Now, what if 52 out of 100 trials came up heads? Would your best guess for the fraction of heads be 52/100?

For t=2 you’d be less confident in predicting that the third flip is heads. For t=100 with all heads, you’d be more confident in predicting that the 101st flip is heads than in the case where only 52 flips were heads.

The answer to this is variance. The confidence in our estimate depends on two things:

- Size of the sample (100 vs 2)

- The variance of the sample (all heads vs 52 heads)

As the variance grows, we need larger samples to have the same degree of confidence. When about half the flips are heads and half are tails, the variance is high, but when the outcome is always heads, the variance is 0.
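The effect of sample size and variance can be put into numbers. The sketch below assumes each flip is coded as 1 for heads and 0 for tails, so a sample with a fraction p of heads has variance p(1 - p) (dividing by n), and the estimated fraction has a standard error of sqrt(p(1 - p) / n). The function names are hypothetical.

```python
import math


def flip_variance(num_heads, num_flips):
    """Variance of flips coded as 1 (heads) / 0 (tails): p * (1 - p)."""
    p = num_heads / num_flips
    return p * (1 - p)


def standard_error(num_heads, num_flips):
    """Standard error of the estimated fraction of heads."""
    return math.sqrt(flip_variance(num_heads, num_flips) / num_flips)


if __name__ == '__main__':
    # (heads, flips): all heads, about half heads, about half heads with more flips
    for heads, flips in ((100, 100), (52, 100), (5200, 10000)):
        print(f'{heads}/{flips}: variance = {flip_variance(heads, flips):.4f}, '
              f'std error = {standard_error(heads, flips):.4f}')
```

With the same fraction of heads, the standard error shrinks as the number of flips grows. Note that a variance of 0 from only 2 flips would not mean much, which is why the sample size matters as well.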

Let’s now see some experiments based on Monte Carlo methods in action.

Monte Carlo Simulation experiments

Roulette is one of the most popular games among statisticians and gamblers alike, although for very different reasons. We’re going to see Monte Carlo simulation in action by simulating the game of roulette.

To make this fun, we’re going to divide this into 3 related experiments:

- Fair Roulette: Expected value for the return of your bets should be 0%.

- European Roulette: Expected value for the return of your bets should be ~-2.7%.

- American Roulette: Expected value for the return of your bets should be ~-5.3%.
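These expected values can be checked by hand. The sketch below assumes a $1 bet on a single pocket that pays 35 to 1 (the same payout the simulation code in this article uses) on wheels with 36, 37, and 38 pockets. The function name `expected_return` is made up for this example.

```python
from fractions import Fraction


def expected_return(num_pockets, payout_odds=35, bet=1):
    """Exact expected return per unit bet on a single pocket."""
    p_win = Fraction(1, num_pockets)
    # win payout_odds * bet with probability p_win, lose the bet otherwise
    return (p_win * payout_odds * bet - (1 - p_win) * bet) / bet


for name, pockets in (('Fair', 36), ('European', 37), ('American', 38)):
    value = expected_return(pockets)
    print(f'{name}: {value} = {float(100 * value):.2f}%')
```

Under these assumptions the fair wheel comes out at exactly 0, the European wheel at -1/37 (about -2.70%), and the American wheel at -1/19 (about -5.26%).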

Fair Roulette

Let’s define a simple Python class to simulate the game:

```python
import random

random.seed(0)  # fixed seed so the runs are reproducible


class FairRoulette():
    def __init__(self):
        # 36 pockets, numbered 1 through 36
        self.pockets = []
        for i in range(1, 37):
            self.pockets.append(i)
        self.ball = None
        # payout odds for a single-pocket bet: 35 to 1
        self.pocketOdds = len(self.pockets) - 1

    def spin(self):
        self.ball = random.choice(self.pockets)

    def betPocket(self, pocket, amt):
        # comparing as strings also works for non-numeric pockets such as '00'
        if str(pocket) == str(self.ball):
            return amt*self.pocketOdds
        else:
            return -amt

    def __str__(self):
        return 'Fair Roulette'
```

- There are 36 pockets numbered from 1–36

- Initially, the ball is at None. The wheel is spun and the ball lands on one of these pockets randomly

- If the pocket number on which the ball landed matches the number you bet on, you win the game. So if you bet $1 at the start and you win the hand, you end up with $36 (your $1 stake plus $35 in winnings). Amazing, isn’t it?

Now a function to put all instructions and rules together:

```python
def playRoulette(game, numSpins, pocket, bet, toPrint):
    totalPocket = 0
    for i in range(numSpins):
        game.spin()
        totalPocket += game.betPocket(pocket, bet)
    if toPrint:
        print(f'{numSpins} spins of {game}')
        print(f'Expected return betting {pocket} = {str(100*totalPocket/numSpins)}%\n')
    return (totalPocket/numSpins)
```

The code is pretty much self-explanatory. totalPocket is a variable that keeps the sum of the money you won/lost. numSpins is how many times you placed a bet or spun the wheel.

Now let’s run this thing for 100 and 1 million spins.

```python
game = FairRoulette()
for numSpins in (100, 1000000):
    for i in range(3):
        playRoulette(game, numSpins, 2, 1, True)
```

In the output you can see:

- For 100 spins you get a 44% positive return, followed by a negative 28%, and then a positive 44% again. That’s a much higher variance, but things get interesting when you look at the bigger picture.

- For a million spins the returns always hover around the mean of 0, which is the expected value we started with. The variance is low.

You might be wondering why we bother with a million spins. A gambler might not, but a casino surely will. For a gambler who plays, say, 100 games on a given day, it’s hard to predict how much he’ll win or lose that day. But a casino has to run for years, with thousands of gamblers playing tens of millions of hands.

So what’s going on with the experiment is something called the law of large numbers. According to the law of large numbers:

the average of the results obtained from a large number of trials should be close to the expected value and will tend to become closer to the expected value as more trials are performed.

That means if we could spin the roulette wheel an infinite number of times, the average return would be zero. This is why the result was much closer to zero with a million spins than with a hundred.

Let’s now run European and American roulette. You see, casinos are not in the business of being fair. After all, they have to live up to the phrase “the house always wins!”. In European roulette, they add a green “0” pocket to the wheel alongside the red and black ones, and in American roulette they sneak in another green pocket, “00”, making the odds tougher for the gambler.

Let’s simulate both and compare them to the Fair roulette simulations.

```python
class EuRoulette(FairRoulette):
    def __init__(self):
        FairRoulette.__init__(self)
        self.pockets.append('0')

    def __str__(self):
        return 'European Roulette'


class AmRoulette(EuRoulette):
    def __init__(self):
        EuRoulette.__init__(self)
        self.pockets.append('00')

    def __str__(self):
        return 'American Roulette'
```

We simply inherit from the FairRoulette class, since the methods and attributes stay the same; the only change is the additional pockets we append. Now a function to run the trials and save the returns:

```python
def findPocketReturn(game, numTrials, trialSize, toPrint):
    pocketReturns = []
    for t in range(numTrials):
        trialVals = playRoulette(game, trialSize, 2, 1, toPrint)
        pocketReturns.append(trialVals)
    return pocketReturns
```

In order to compare, we spin the wheel for all three and see the average returns for 20 trials of each.

```python
numTrials = 20
resultDict = {}
games = (FairRoulette, EuRoulette, AmRoulette)
for G in games:
    resultDict[G().__str__()] = []
for numSpins in (1000, 10000, 100000, 1000000):
    print(f'\nSimulate, {numTrials} trials of {numSpins} spins each')
    for G in games:
        pocketReturns = findPocketReturn(G(), numTrials, numSpins, False)
        expReturn = 100*sum(pocketReturns)/len(pocketReturns)
        print(f'Exp. return for {G()} = {str(round(expReturn, 4))}%')
```

Running the above excerpt prints the average return of each game for every trial size.

You can see that as the number of spins grows, the return for each game gets closer to its expected value. So if you play long enough, you are most likely to lose ~3% in European roulette and ~5% in American roulette. Told you, casinos are in the business of sure-shot profit.

Note: Whenever you’re sampling randomly, you aren’t guaranteed to get perfect accuracy. It’s always possible to get a weird sample and that’s one of the reasons we averaged over 20 trials.
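One way to see how much individual trials can differ is to look at the standard deviation of the returns across trials, not only their average. The following is a self-contained sketch under stated assumptions: it does not reuse the classes above, it models a $1 bet on a single pocket paying 35 to 1, and the names `trial_return` and `summarize_trials` are hypothetical.

```python
import random
import statistics


def trial_return(num_pockets, num_spins, rng, payout_odds=35):
    """Average return per $1 bet on one pocket over num_spins spins."""
    total = 0
    for _ in range(num_spins):
        # pocket 0 stands in for the pocket we bet on
        if rng.randrange(num_pockets) == 0:
            total += payout_odds
        else:
            total -= 1
    return total / num_spins


def summarize_trials(num_pockets, num_trials, num_spins, seed=0):
    """Return (mean, standard deviation) of the returns across trials."""
    rng = random.Random(seed)
    returns = [trial_return(num_pockets, num_spins, rng)
               for _ in range(num_trials)]
    return statistics.mean(returns), statistics.stdev(returns)


if __name__ == '__main__':
    # 37 pockets corresponds to the European wheel
    for num_spins in (1000, 100000):
        mean, std = summarize_trials(37, 20, num_spins)
        print(f'{num_spins} spins: mean = {100*mean:.2f}%, std = {100*std:.2f}%')
```

The standard deviation across trials should shrink as the number of spins per trial grows, which is another way of seeing why longer trials give more trustworthy averages.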

You’ve reached the end!

You now understand what a Monte Carlo Simulation is and how to perform one. We only took the example of roulette, but you can experiment with other games of chance. Visit the notebook here to find the complete code in this blog and one bonus experiment we did for you!

Following are some additional research resources that you may want to follow:

- If you want to explore how Monte Carlo simulations are applied to technical systems then this book is a good starting point for it.

- For further reading about Stochastic thinking, you can see the lectures here.

- If you want to go into deep analysis you can view some latest research related to Monte Carlo simulation and methods here.

References

- Monte Carlo Simulation

- https://www.investopedia.com/terms/m/montecarlosimulation.asp

- https://en.wikipedia.org/wiki/Monte_Carlo_method

